Python libraries are a fun and easy way to begin learning and using Python for SEO.

A Python library is a collection of useful functions and code that allows you to perform a variety of tasks without having to write the code from scratch.

Python has over 100,000 libraries that can be used for everything from data analysis to creating video games.

This article contains a list of libraries that I have used to complete SEO projects and tasks. They’re all beginner-friendly, and there’s plenty of documentation and resources to help you get started.

Why Are Python Libraries Beneficial in SEO?

Each Python library includes functions and variables of various types (arrays, dictionaries, objects, and so on) that can be used to perform various tasks.

In SEO, for example, they can be used to automate certain tasks, predict outcomes, and provide intelligent insights.

Although working with plain Python is possible, libraries can be used to make tasks much easier and faster to write and complete.

Python Libraries for SEO Projects

Python libraries are useful for SEO tasks such as data analysis, web scraping, and visualizing insights.

This is not an exhaustive list, but these are the libraries that I use the most for SEO.

Pandas

Pandas is a Python library for working with tabular data. It enables high-level data manipulation, with the DataFrame as its primary data structure.

DataFrames are similar to Excel spreadsheets, but they are not subject to Excel's row and byte limits, and they are also much faster and more efficient.

The best way to get started with Pandas is to take a simple CSV of data (for example, a crawl of your website) and save it as a DataFrame within Python.

Once this is saved in Python, you can use it to perform a variety of analysis tasks such as aggregating, pivoting, and cleaning data.

For example, if I have a full crawl of my website and want to extract only the indexable pages, I will use a built-in Pandas function to include only those URLs in my DataFrame.

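import pandas as pd

# Load the crawl export into a DataFrame
df = pd.read_csv('/Users/rutheverett/Documents/Folder/file_name.csv')

# Preview the first five rows
df.head()

# Keep only the rows where the 'indexable' column is True
indexable = df[df['indexable'] == True]
indexable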
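As a rough sketch of the cleaning, filtering, and aggregating steps mentioned above, the snippet below builds a tiny DataFrame by hand instead of reading a CSV, so it runs without any files. The column names (`url`, `status_code`, `indexable`) and the rows are made up for illustration; a real crawl export will have its own columns.

```python
import pandas as pd

# Hypothetical crawl data; a real export would come from pd.read_csv()
crawl = pd.DataFrame({
    "url": [
        "https://example.com/",
        "https://example.com/blog/",
        "https://example.com/blog/",      # duplicate row to clean up
        "https://example.com/old-page/",
        "https://example.com/private/",
    ],
    "status_code": [200, 200, 200, 404, 200],
    "indexable": [True, True, True, False, False],
})

# Cleaning: drop exact duplicate rows
crawl = crawl.drop_duplicates().reset_index(drop=True)

# Filtering: keep only the indexable URLs
indexable = crawl[crawl["indexable"]]

# Aggregating: count how many URLs returned each status code
status_counts = crawl.groupby("status_code")["url"].count()

print(indexable["url"].tolist())
print(status_counts.to_dict())
```

The same three steps scale to a full crawl; only the source of the DataFrame changes.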

Requests

Requests is the next library, and it is used to make HTTP requests in Python.

It makes a request using one of several methods, such as GET and POST, and stores the result in Python.

As an example of this in action, a simple GET request to a URL prints the response object, which includes the page’s status code:

import requests

response = requests.get('https://www.deepcrawl.com')
print(response)

You can then use this result to build a decision-making function, where a 200 status code indicates that the page is available but a 404 indicates that the page cannot be found.

if response.status_code == 200:
    print('Success!')
elif response.status_code == 404:
    print('Not Found.')
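One way to extend this decision-making idea is a small helper that maps any status code to a label. The function name `classify_status` and the labels are assumptions chosen for illustration, and plain integers are used instead of a live response so the sketch runs offline.

```python
def classify_status(status_code: int) -> str:
    """Map an HTTP status code to a short label (labels are illustrative)."""
    if status_code == 200:
        return "OK"
    if status_code in (301, 302, 307, 308):
        return "Redirect"
    if status_code == 404:
        return "Not Found"
    if 500 <= status_code < 600:
        return "Server Error"
    return "Other"


# With Requests you would pass response.status_code;
# plain integers are used here so the example runs offline.
for code in (200, 301, 404, 503):
    print(code, classify_status(code))
```

Returning a label rather than printing inside the function makes it easy to store the result alongside each URL later on.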

You can also inspect other parts of the response, such as the headers, which contain useful information about the page, such as the content type or how long the response can be cached.

headers = response.headers
print(headers)
response.headers['Content-Type']
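To illustrate reading individual header values, here is a minimal sketch that pulls the `max-age` directive out of a `Cache-Control` header. The helper name `cache_max_age` and the sample headers are hypothetical. Note that `response.headers` in Requests is case-insensitive, whereas the plain dictionary used here is not.

```python
import re
from typing import Mapping, Optional


def cache_max_age(headers: Mapping[str, str]) -> Optional[int]:
    """Return the max-age value in seconds from Cache-Control, or None."""
    cache_control = headers.get("Cache-Control", "")
    match = re.search(r"max-age=(\d+)", cache_control)
    return int(match.group(1)) if match else None


# Hypothetical headers; with Requests you would pass response.headers
sample_headers = {
    "Content-Type": "text/html; charset=UTF-8",
    "Cache-Control": "public, max-age=3600",
}

print(sample_headers["Content-Type"])
print(cache_max_age(sample_headers))
```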

There is also the option to simulate a specific user agent, such as Googlebot, to extract the response that the bot will see when crawling the page.

headers = {'User-Agent': 'Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)'}
ua_response = requests.get('https://www.deepcrawl.com/', headers=headers)
print(ua_response)

Beautiful Soup

Beautiful Soup is a data extraction library for HTML and XML files.

Fun fact: The BeautifulSoup library was named after a poem in Lewis Carroll’s Alice’s Adventures in Wonderland.

BeautifulSoup, as a library, is used to make sense of web files and is most commonly used for web scraping because it can transform an HTML document into different Python objects.

For example, you can take a URL and use Beautiful Soup in conjunction with the Requests library to extract the page title.

from bs4 import BeautifulSoup
import requests

url = 'https://www.deepcrawl.com'
req = requests.get(url)
soup = BeautifulSoup(req.text, "html.parser")

title = soup.title
print(title)

BeautifulSoup also allows you to extract specific elements from a page using the find_all method, such as all of the a href links on the page:

url = 'https://www.deepcrawl.com/knowledge/technical-seo-library/'
req = requests.get(url)
soup = BeautifulSoup(req.text, "html.parser")

for link in soup.find_all('a'):
    print(link.get('href'))
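The same methods work on any HTML string, which makes it easy to experiment without making a request. The sketch below parses a small, made-up page and extracts the title text, the meta description, and the links resolved against a hypothetical base URL.

```python
from urllib.parse import urljoin

from bs4 import BeautifulSoup

# Hypothetical page source; in practice this would be req.text
html = """
<html>
  <head>
    <title>Example Page</title>
    <meta name="description" content="A short example description.">
  </head>
  <body>
    <a href="/about/">About</a>
    <a href="https://other.example.org/">Partner</a>
    <a>No href here</a>
  </body>
</html>
"""
base_url = "https://example.com"

soup = BeautifulSoup(html, "html.parser")

# .get_text() returns the title without the surrounding <title> tag
title = soup.title.get_text()

# find() returns the first matching tag, or None if there is none
meta = soup.find("meta", attrs={"name": "description"})
description = meta["content"] if meta else None

# href=True skips anchors without an href; urljoin resolves relative paths
links = [urljoin(base_url, a["href"]) for a in soup.find_all("a", href=True)]

print(title)
print(description)
print(links)
```

Resolving relative paths up front means every extracted link is a full URL that can be requested or compared directly.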

Putting It All Together

These three libraries can also be used in tandem, with Requests being used to make the HTTP request to the page from which BeautifulSoup will extract information.

The raw data can then be transformed into a Pandas DataFrame for further analysis.

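import pandas as pd
import requests
from bs4 import BeautifulSoup

url = 'https://www.deepcrawl.com/blog/'
req = requests.get(url)
soup = BeautifulSoup(req.text, "html.parser")

# Collect the href of each link rather than the whole tag
links = [link.get('href') for link in soup.find_all('a')]

df = pd.DataFrame({'links': links})
df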

Seaborn and Matplotlib

Python libraries for creating visualizations include Matplotlib and Seaborn.

You can use Matplotlib to create a variety of data visualizations such as bar charts, line graphs, histograms, and even heatmaps.

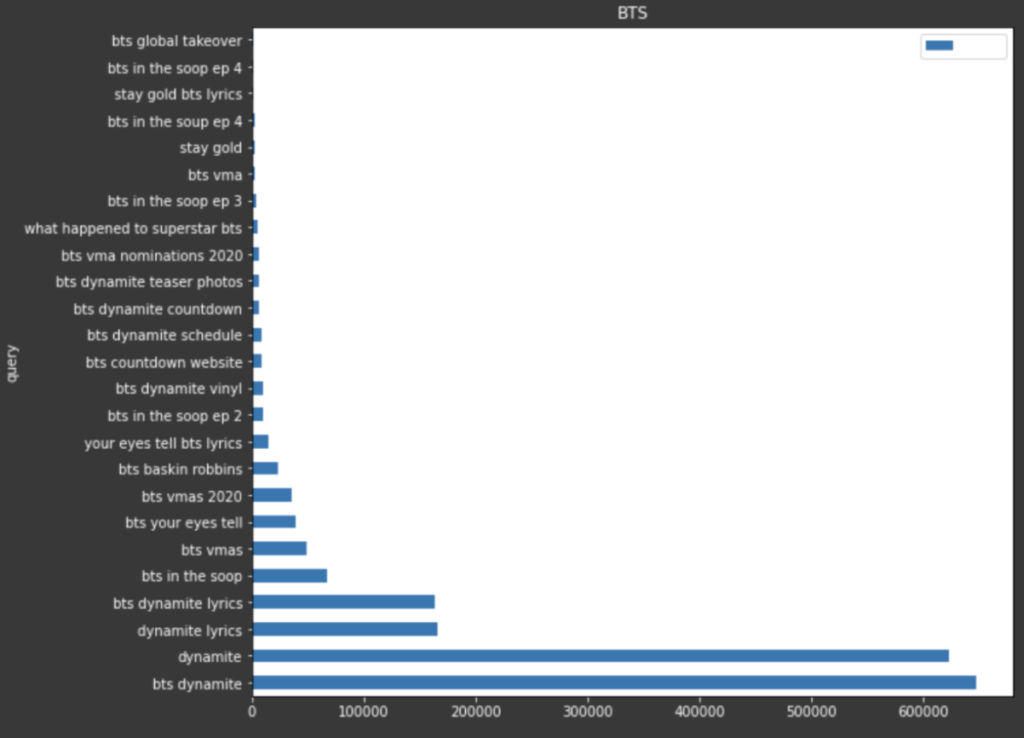

For example, if I wanted to use Google Trends data to display the most popular queries over 30 days, I could use Matplotlib to create a bar chart to visualize all of this.
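A bar chart along those lines might look like the following sketch. The queries and interest scores are invented for illustration (real values would come from a Google Trends export), and the output filename is an arbitrary choice.

```python
import matplotlib

matplotlib.use("Agg")  # render to a file without needing a display
import matplotlib.pyplot as plt

# Made-up interest scores; real values would come from a Google Trends export
queries = ["python seo", "seo automation", "log file analysis", "web scraping"]
interest = [82, 64, 38, 71]

fig, ax = plt.subplots(figsize=(8, 4))
bars = ax.bar(queries, interest)
ax.set_title("Query popularity over 30 days (sample data)")
ax.set_xlabel("Query")
ax.set_ylabel("Interest score")
fig.tight_layout()
fig.savefig("query_popularity.png")
```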

Seaborn, which is based on Matplotlib, offers additional visualization patterns such as scatterplots, box plots, and violin plots, in addition to line and bar graphs.

It differs from Matplotlib in that it requires less syntax and comes with built-in default themes.

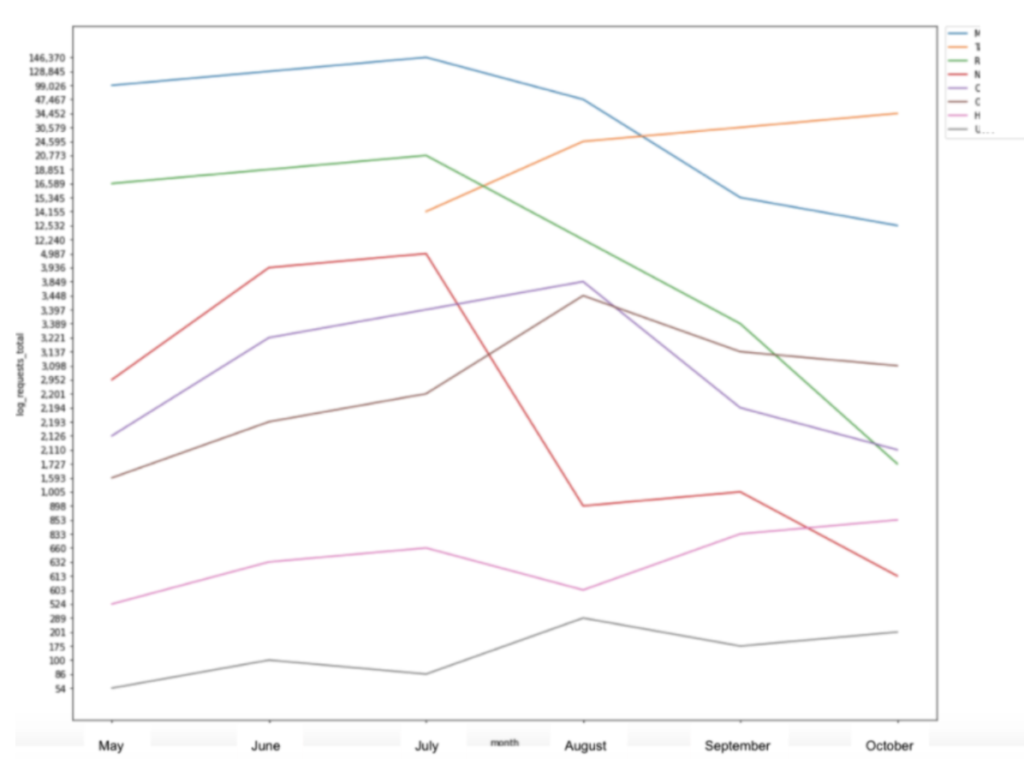

I’ve used Seaborn to make line graphs to visualize log file hits to specific segments of a website over time.

This example uses data from a pivot table, which I created in Python using the Pandas library, and is another example of how these libraries work together to create an understandable picture from the data.
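The pivot table behind a chart like this could be built roughly as follows. The log data, the column names (`date`, `segment`, `hits`), and the segment labels are assumptions made for illustration; real data would be parsed from server logs.

```python
import pandas as pd

# Made-up log file hits; each row represents one request
hits = pd.DataFrame({
    "date": ["2021-01-01", "2021-01-01", "2021-01-01",
             "2021-01-02", "2021-01-02", "2021-01-02"],
    "segment": ["blog", "blog", "product",
                "blog", "product", "product"],
})
hits["date"] = pd.to_datetime(hits["date"])
hits["hits"] = 1  # one hit per log row

# One row per day, one column per segment, each cell a hit count
pivot = hits.pivot_table(
    index="date", columns="segment", values="hits",
    aggfunc="sum", fill_value=0,
)
print(pivot)

# If Seaborn is installed, sns.lineplot(data=pivot) draws one line per segment
```

Keeping the dates as the index and the segments as columns is what lets a plotting library treat each segment as its own line.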


Advertools

Advertools is a library created by Elias Dabbas that can be used to help SEO professionals and digital marketers manage, understand, and make decisions based on data.

Sitemap Analysis

This library enables you to perform a variety of tasks, such as downloading, parsing, and analyzing XML Sitemaps to extract patterns or determine how frequently content is added or changed.
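Advertools handles the downloading and parsing for you. To show what the parsing step involves, here is a minimal sketch that uses only Python's standard library rather than Advertools itself, run against a made-up sitemap held in a string.

```python
import xml.etree.ElementTree as ET

# Hypothetical sitemap content; a real one would be downloaded first
sitemap_xml = """
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url>
    <loc>https://example.com/</loc>
    <lastmod>2021-01-10</lastmod>
  </url>
  <url>
    <loc>https://example.com/blog/</loc>
    <lastmod>2021-02-03</lastmod>
  </url>
</urlset>
"""

# Sitemap elements live in this XML namespace
NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

root = ET.fromstring(sitemap_xml.strip())

# Build one dictionary per <url> entry
rows = [
    {
        "loc": url.findtext("sm:loc", namespaces=NS),
        "lastmod": url.findtext("sm:lastmod", namespaces=NS),
    }
    for url in root.findall("sm:url", NS)
]

for row in rows:
    print(row)
```

A list of dictionaries like `rows` can be passed straight to `pd.DataFrame(rows)` for further analysis.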

Robots.txt Analysis

Another interesting thing you can do with this library is to use a function to extract a website’s robots.txt file into a DataFrame, allowing you to easily understand and analyze the rules set.

You can also run a test within the library to see if a specific user agent is capable of retrieving specific URLs or folder paths.
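The idea behind such a test can be sketched with the standard library's `urllib.robotparser`, used here as a stand-in rather than Advertools' own function. The rules and URLs are made up, and this parser's matching is simpler than Google's (it does not handle wildcards, for instance), so treat the results as an approximation.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules; a real file would be fetched from /robots.txt
robots_txt = """\
User-agent: *
Disallow: /private/

User-agent: Googlebot
Disallow: /search/
"""

parser = RobotFileParser()
parser.parse(robots_txt.splitlines())

# (user agent, URL) pairs to test against the rules
checks = [
    ("Googlebot", "https://example.com/search/results"),
    ("Googlebot", "https://example.com/private/page"),
    ("Bingbot", "https://example.com/private/page"),
    ("Bingbot", "https://example.com/blog/"),
]
for user_agent, url in checks:
    print(user_agent, url, parser.can_fetch(user_agent, url))
```

Because Googlebot has its own group in this file, only that group's rules apply to it, which is why it may fetch `/private/page` while other bots may not.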

URL Analysis

You can also parse and analyze URLs with Advertools to extract information and better understand analytics, SERP, and crawl data for specific sets of URLs.

You can also use the library to split URLs to determine things like the HTTP scheme, the main path, additional parameters, and query strings.
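For a sense of what splitting a URL produces, here is a small sketch using the standard library's `urllib.parse` instead of Advertools. The URL is a made-up example.

```python
from urllib.parse import parse_qs, urlsplit

# Hypothetical URL with a path, query string, and fragment
url = "https://example.com/blog/python-seo/?utm_source=newsletter&page=2#comments"

parts = urlsplit(url)

# parse_qs turns the query string into a dict of lists
query = parse_qs(parts.query)

# First folder of the path, useful for grouping URLs by site section
first_dir = parts.path.strip("/").split("/")[0]

print(parts.scheme)
print(parts.netloc)
print(parts.path)
print(first_dir)
print(query)
print(parts.fragment)
```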

Selenium

Selenium is a Python library that is commonly used for automation. Web application testing is the most common use case.

A script that opens a browser and performs several different steps in a defined sequence, such as filling out forms or clicking certain buttons, is a popular example of Selenium automating a flow.

Selenium follows the same logic as the Requests library, which we discussed earlier.

However, it will not only send the request and wait for a response, but it will also render the requested webpage.

To get started with Selenium, you’ll need a WebDriver to interact with the browser.

Each browser has its own WebDriver; for example, Chrome has ChromeDriver and Firefox has GeckoDriver.

These are simple to download and integrate into your Python code. Here’s a helpful article with an example project that explains the setup process.

Scrapy

Scrapy is the final library I wanted to discuss in this article.

While we can use the Requests module to crawl a webpage and extract its raw data, we must combine it with BeautifulSoup to parse that data and extract useful insights.

Scrapy enables you to do both of these in a single library.

Scrapy is also significantly faster and more powerful: it completes crawl requests, extracts and parses data in a predefined order, and allows you to shield the data.

Within Scrapy, you can specify a number of parameters, such as the name of the domain you want to crawl, the start URL, and which page folders the spider is or is not allowed to crawl.

Scrapy, for example, can be used to extract all of the links on a specific page and store them in an output file.

from scrapy.spiders import CrawlSpider

class SuperSpider(CrawlSpider):
    # Name used to run the spider from the command line
    name = 'extractor'
    allowed_domains = ['www.deepcrawl.com']
    start_urls = ['https://www.deepcrawl.com/knowledge/technical-seo-library/']
    base_url = 'https://www.deepcrawl.com'

    def parse(self, response):
        # Yield one item per link found inside a paragraph
        for link in response.xpath('//div/p/a'):
            yield {
                "link": self.base_url + link.xpath('.//@href').get()
            }

You can take this a step further by following the links found on a webpage to extract information from all the pages that are linked to from the start URL, similar to how Google finds and follows links on a page.

from scrapy.spiders import CrawlSpider, Rule

class SuperSpider(CrawlSpider):
    name = 'follower'
    allowed_domains = ['en.wikipedia.org']
    start_urls = ['https://en.wikipedia.org/wiki/Web_scraping']
    base_url = 'https://en.wikipedia.org'

    # Only follow links one level deep from the start URL
    custom_settings = {
        'DEPTH_LIMIT': 1
    }

    def parse(self, response):
        # Follow each link found inside a paragraph
        for next_page in response.xpath('.//div/p/a'):
            yield response.follow(next_page, self.parse)

        # Extract the main heading of each page visited
        for quote in response.xpath('.//h1/text()'):
            yield {'quote': quote.extract()}

Learn more about these projects, as well as other examples, by clicking here.

Final Thoughts

“The best way to learn is by doing,” Hamlet Batista always said.

I hope that discovering some of the available libraries has inspired you to start learning Python or to expand your knowledge.

Contributions to Python from the SEO Industry

Hamlet also appreciated the Python SEO community’s resources and projects. I wanted to share some of the amazing things I’ve seen from the community to honor his passion for encouraging others.

Charly Wargnier has created SEO Pythonistas as a wonderful tribute to Hamlet and the SEO Python community he helped to cultivate. This site collects contributions of the amazing Python projects that those in the SEO community have created.

Hamlet’s invaluable contributions to the SEO community are also highlighted there.

Moshe Ma-yafit wrote a fantastic script for log file analysis, which he explains in this post. It can display visualizations such as Google Bot Hits By Device, Daily Hits by Response Code, Response Code Percentage Total, and more.

Koray Tuğberk GÜBÜR is currently working on a Sitemap Health Checker. He also co-hosted a RankSense webinar with Elias Dabbas, during which he shared a script for recording SERPs and analyzing algorithms.

The script records SERPs at regular time intervals, and you can crawl all the landing pages, blend the data, and look for correlations.

John McAlpin published an article in which he explains how to spy on your competitors using Python and Data Studio.

JC Chouinard has written an in-depth guide to using the Reddit API. You can use this to do things like extract data from Reddit and post to a Subreddit.

Rob May is developing a new GSC analysis tool while also building a few new domain/real sites in Wix to compare to its higher-end WordPress competitor.
